Building a global reverse proxy with on-demand SSL support

...or: How and why to launch your first EC2 instances when you're full-on Serverless on any other day of the year

Motivation#

Who needs a reverse proxy with on-demand SSL support? Well, think about services such as Hashnode, which also runs this blog, or Fathom and SimpleAnalytics. A feature that all those services have in common? They all enable their customers to bring their own custom domain names. The latter two services use them to bypass adblockers, so that customers can track all their pageviews and events, which potentially wouldn't be possible otherwise because the services' own domains are prone to ending up on DNS block lists. Hashnode uses them to enable its customers to host their blogs under their own domain names.

Requirements#

What are the functional & non-functional requirements to build such a system? Let's try to recap:

We want to be able to use a custom ("external") domain, e.g. subdomain.customdomain.tld, to redirect to another domain, such as targetdomain.tld, via a CNAME DNS record

The custom domains need to have SSL/TLS support

The custom domains need to be configurable, without changing the underlying infrastructure

We want to make sure that the service will only create and provide the certificates for whitelisted custom domains

We want to optimize the latency of individual requests, thus need to support a scalable and distributed infrastructure

We want to be as Serverless as possible

We want to optimize for infrastructure costs (variable and fixed)

We want to build this on AWS

The service needs to be deployable/updateable/removable via Infrastructure as Code (IaC) in a repeatable manner

Architectural considerations#

Looking at the above requirements, what are the implications from an architectural point of view? Which tools and services are already on the market? What does AWS as Public Cloud Provider offer for our use case?

For the main requirements of a reverse proxy server with automatic SSL/TLS support, Caddy seems to be an optimal candidate. As it is written in Go, it can run in Docker containers very well and can be used on numerous operating systems. This means we have the options to either run it on EC2 instances or ECS/Fargate if we decide to run it in containers. The latter would cater to the requirement to run as Serverless as possible. It has modules to store the generated SSL/TLS on-demand certificates in DynamoDB or S3.

Also, whitelisting custom domains for those certificates is possible by providing an additional backend service, which Caddy can ask whether a requested custom domain is allowed to be used or not.

A challenge is that none of those modules are contained in the official Caddy builds, meaning that we'd have to build a custom version of Caddy to be able to use those storage backends.

Regarding the requirement of global availability and short response times, AWS Global Accelerator is a viable option, as it can provide a global single static IP address endpoint for multiple, regionally distributed services. In our use case, those services would be our Caddy installations.

Running Caddy itself, as said before, is possible via containers or EC2 instances / VMs. As the services will run the whole time and presumably don't need a lot of resources when not under heavy load, we assume that 1 vCPU and 1 GB of memory should be enough.

When projecting this on the necessary infrastructure, the cost comparison between Containers and VMs looks like the following (for simplification, we just compare the fixed costs, ignore variable costs such as egress traffic, and assume the us-east-1 region is used):

Containers

Fargate task with 1 vCPU and 1 GB of memory for each Caddy regional instance

- Price: ($0.04048/vCPU hour + $0.004445/GB hour) * 720 hours (30 days) = $32.35 / 30 days

ALB to make the Fargate tasks available to the outside world

- Price: ($0.0225 per ALB-hour + $0.008 per LCU-hour) * 720 hours (30 days) = $21.96 / 30 days

In combination, it would cost $54.31 to run this setup for 30 days.

EC2 instances / VMs

A t2.micro instance with 1 vCPU and 1 GB of memory for each Caddy regional instance

- Price: $0.0116/hour on-demand * 720 hours (30 days) = $8.35

There's no need for a Load Balancer in front of the EC2 instances, as Global Accelerator can directly use them

- Price: $0

In combination, it would cost $8.35 to run this setup for 30 days.

Additional costs are:

AWS Global Accelerator

- Price: $0.025 / hour * 720 hours (30 days) = $18

DynamoDB table

- Price: (5000 reads/day * $0.25/million + 50 writes/day * $1.25/million) * 30 days = $0.0375 (reads) + $0.001875 (writes) = $0.04

Lambda function (128 MB / 0.25 sec avg. duration / 5000 req./day)

- Price: ($0.0000166667 / GB-second + $0.20 per 1M req.) = basically $0

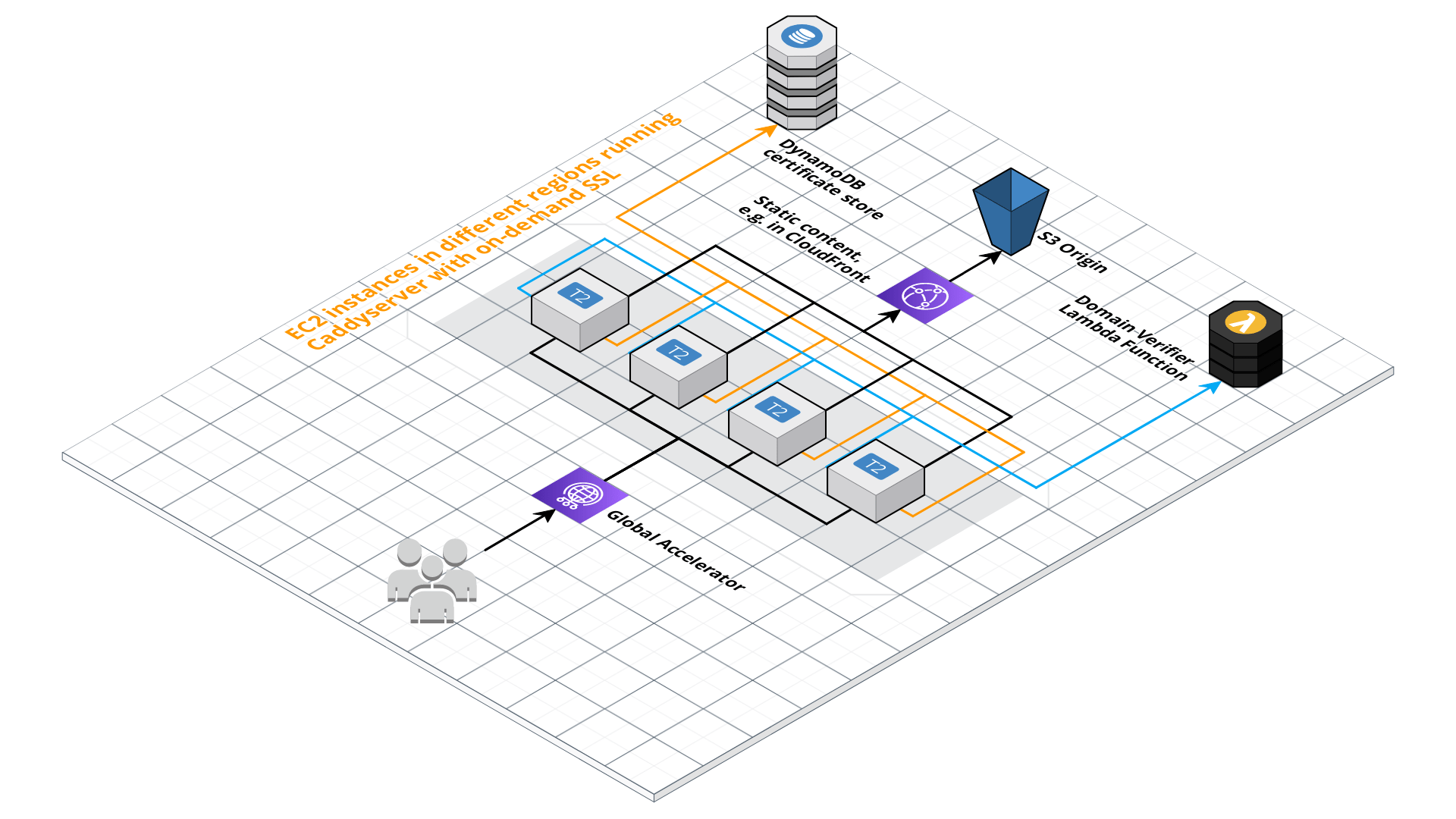

Resulting architecture#

Based on the calculated fixed costs, we decided to use EC2 instances instead of Fargate tasks, which will save us a decent amount of money even for one Caddy instance. The estimated costs for running this architecture for 30 days are $26.39.
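The arithmetic behind these figures can be reproduced in a few lines. The following is a minimal sketch in Python (a language chosen for illustration only, not taken from the project) that uses just the us-east-1 prices quoted above. The variable names are made up for this example, and the Lambda function is left out because its cost is negligible.

```python
HOURS = 720  # 30 days

# Hourly prices as quoted in the comparison above (us-east-1)
fargate = (0.04048 * 1 + 0.004445 * 1) * HOURS  # 1 vCPU + 1 GB of memory
alb = (0.0225 + 0.008) * HOURS                  # ALB-hour + one LCU-hour
ec2 = 0.0116 * HOURS                            # t2.micro on-demand
accelerator = 0.025 * HOURS                     # Global Accelerator fixed fee

# DynamoDB on-demand: price per million requests, 30 days of traffic
dynamodb = (5000 * 0.25 + 50 * 1.25) / 1_000_000 * 30

container_setup = fargate + alb   # compute part, container variant
ec2_setup = ec2                   # compute part, VM variant (no load balancer)
total_ec2 = ec2_setup + accelerator + dynamodb

print(f"Fargate + ALB:       ${container_setup:.2f} / 30 days")
print(f"EC2 only:            ${ec2_setup:.2f} / 30 days")
print(f"EC2 + GA + DynamoDB: ${total_ec2:.2f} / 30 days")
```

Changing HOURS or the request volumes makes it easy to see how the fixed costs scale when additional regional instances are added.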

As one of our requirements was that we can roll out this infrastructure potentially on a global scale, we need to be able to deploy the EC2 instances with the Caddy servers in different AWS regions, as well as having multiple instances in the same region.

Furthermore, we could use DynamoDB Global Tables to distribute the certificates globally and get faster response times, but we consider this out of scope for this article.

The final architecture:

Implementation#

To implement the described architecture, we must take several steps. First of all, we must build a custom version of Caddy that includes the DynamoDB module, which then enables us to use DynamoDB as certificate store.

Custom Caddy build#

This can be achieved via a custom build process leveraging Docker images of AmazonLinux 2, as found at tobilg/aws-caddy-build.

build.sh (parametrized custom build of Caddy via Docker)

#!/usr/bin/env bash
set -e

# Set OS (first script argument)
OS="${1:-linux}"

# Set Caddy version (second script argument)
CADDY_VERSION="${2:-v2.6.2}"

# Create release folders
mkdir -p "$PWD/releases" "$PWD/temp_release"

# Run build
docker build --build-arg OS="$OS" --build-arg CADDY_VERSION="$CADDY_VERSION" -t custom-caddy-build .

# Copy release from image to temporary folder
docker run -v "$PWD/temp_release:/opt/mount" --rm -ti custom-caddy-build bash -c "cp /tmp/caddy-build/* /opt/mount/"

# Copy release to releases
cp "$PWD"/temp_release/* "$PWD/releases/"

# Cleanup
rm -rf "$PWD/temp_release"

Dockerfile (will build Caddy with the DynamoDB and S3 modules)

FROM amazonlinux:2

ARG CADDY_VERSION=v2.6.2
ARG OS=linux

# Install dependencies
RUN yum update -y && \
    yum install golang -y

RUN GOBIN=/usr/local/bin/ go install github.com/caddyserver/xcaddy/cmd/xcaddy@latest

RUN mkdir -p /tmp/caddy-build && \
    GOOS=${OS} xcaddy build ${CADDY_VERSION} --with github.com/ss098/certmagic-s3 --with github.com/silinternational/certmagic-storage-dynamodb/v3 --output /tmp/caddy-build/aws_caddy_${CADDY_VERSION}_${OS}

That's it for the custom Caddy build. You don't need to build this yourself, as the further steps use the release I built and uploaded to GitHub.

Reverse Proxy Service#

The implementation of the reverse proxy service can be found at tobilg/global-reverse-proxy.

Just clone it via git clone https://github.com/tobilg/global-reverse-proxy.git to your local machine, and configure it as described below.

Prerequisites#

Serverless Framework

You need to have a recent (>=3.1.2) version of the Serverless Framework installed globally on your machine. If you haven't, you can run npm i -g serverless to install it.

Valid AWS credentials

The Serverless Framework relies on already configured AWS credentials. Please refer to the docs to learn how to set them up on your local machine.

EC2 key already configured

If you want to interact with the deployed EC2 instance(s), you need to add your existing public SSH key or create a new one. Please have a look at the AWS docs to learn how you can do that.

Please also note the name you have given to the newly created key, as you will have to update the configuration of the proxy server(s) stack.

Infrastructure as Code overview#

The infrastructure consists of three different stacks:

A stack for the domain whitelisting service, and the certificate table in DynamoDB

A stack for the proxy server(s) themselves, which can be deployed multiple times if you want high (global) availability and low latency

A stack for the Global Accelerator and the corresponding DNS records

Most important parts#

The main functionality, the reverse proxy based on Caddy, is deployed via an EC2 instance. Its configuration, the so-called Caddyfile, is, together with the CloudFormation resource for the EC2 instance, the most important part.

This configuration enables the reverse proxy, the on-demand TLS feature and DynamoDB storage for certificates. It's automatically parametrized via the generated /etc/caddy/environment file (see ec2.yml below). A systemd service for Caddy is generated as well, based on our configuration derived from the serverless.yml.

{
  admin off
  on_demand_tls {
    ask {$DOMAIN_SERVICE_ENDPOINT}
  }
  storage dynamodb {$TABLE_NAME} {
    aws_region {$TABLE_REGION}
  }
}

:80 {
  respond /health "Im healthy" 200
}

:443 {
  tls {$LETSENCRYPT_EMAIL_ADDRESS} {
    on_demand
  }
  reverse_proxy https://{$TARGET_DOMAIN} {
    header_up Host {$TARGET_DOMAIN}
    header_up User-Custom-Domain {host}
    header_up X-Forwarded-Port {server_port}
    health_timeout 5s
  }
}
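To preview what Caddy will effectively see, the {$NAME} placeholders can be substituted by hand. The following Python sketch is only an illustration: parse_env_file and render_caddyfile are hypothetical helpers, not part of Caddy or the repository, and they mimic just the basic {$NAME} and {$NAME:default} forms of Caddy's environment variable substitution. The values used are made-up examples, and treating an unset variable without default as an empty string is an assumption of this sketch.

```python
import re

# Matches {$NAME} and {$NAME:default}; runtime placeholders such as {host} are left alone
ENV_PLACEHOLDER = re.compile(r"\{\$([A-Za-z_][A-Za-z0-9_]*)(?::([^}]*))?\}")


def parse_env_file(text: str) -> dict:
    """Parse KEY=VALUE lines, as written to /etc/caddy/environment."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")  # split at the first "=" only
        env[key.strip()] = value.strip()
    return env


def render_caddyfile(template: str, env: dict) -> str:
    """Replace environment placeholders with values from env."""
    def substitute(match):
        name, default = match.group(1), match.group(2)
        if name in env:
            return env[name]
        # Assumption: unset variables without a default become empty
        return default if default is not None else ""

    return ENV_PLACEHOLDER.sub(substitute, template)


# Example values only; the real ones come from serverless.yml
env = parse_env_file("TARGET_DOMAIN=targetdomain.tld\nTABLE_REGION=us-east-1\n")
snippet = "reverse_proxy https://{$TARGET_DOMAIN} {\n  header_up User-Custom-Domain {host}\n}"
print(render_caddyfile(snippet, env))
```

Note that {host} and {server_port} are request-time placeholders, so they are intentionally not touched here; only the {$...} form is resolved when the configuration is loaded.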

ec2.yml (extract)

The interesting part is the UserData script, which is run automatically when the EC2 instance starts. It does the following:

Download the custom Caddy build with DynamoDB support

Prepare a group and a user for Caddy

Create the caddy.service file for systemctl

Create the Caddyfile (as outlined above)

Create the environment file (/etc/caddy/environment)

Enable & reload the systemctl service

Resources:
  EC2Instance:
    Type: AWS::EC2::Instance
    Properties:
      InstanceType: '${self:custom.ec2.instanceType}'
      KeyName: '${self:custom.ec2.keyName}'
      SecurityGroups:
        - !Ref 'InstanceSecurityGroup'
      ImageId: 'ami-0b5eea76982371e91' # Amazon Linux 2 AMI
      IamInstanceProfile: !Ref 'InstanceProfile'
      UserData: !Base64
        'Fn::Join':
          - ''
          - - |
              #!/bin/bash -xe
            - |
              sudo wget -O /usr/bin/caddy "https://github.com/tobilg/aws-caddy-build/raw/main/releases/aws_caddy_v2.6.2_linux"
            - |
              sudo chmod +x /usr/bin/caddy
            - |
              sudo groupadd --system caddy
            - |
              sudo useradd --system --gid caddy --create-home --home-dir /var/lib/caddy --shell /usr/sbin/nologin --comment "Caddy web server" caddy
            - |
              sudo mkdir -p /etc/caddy
            - |
              sudo echo -e '${file(./configs.js):caddyService}' | sudo tee /etc/systemd/system/caddy.service
            - |
              sudo printf '${file(./configs.js):caddyFile}' | sudo tee /etc/caddy/Caddyfile
            - |
              sudo echo -e "TABLE_REGION=${self:custom.caddy.dynamoDBTableRegion}\nTABLE_NAME=${self:custom.caddy.dynamoDBTableName}\nDOMAIN_SERVICE_ENDPOINT=${self:custom.caddy.domainServiceEndpoint}\nLETSENCRYPT_EMAIL_ADDRESS=${self:custom.caddy.letsEncryptEmailAddress}\nTARGET_DOMAIN=${self:custom.caddy.targetDomainName}" | sudo tee /etc/caddy/environment
            - |
              sudo systemctl daemon-reload
            - |
              sudo systemctl enable caddy
            - |
              sudo systemctl start --now caddy

The Global Accelerator CloudFormation resource wires the EC2 instance(s) to the accelerator, which acts as a kind of global load balancer. It is then referenced by dns-record.yml, which assigns the configured domain name to the Global Accelerator.

Resources:
  Accelerator:
    Type: AWS::GlobalAccelerator::Accelerator
    Properties:
      Name: 'External-Accelerator'
      Enabled: true

  Listener:
    Type: AWS::GlobalAccelerator::Listener
    Properties:
      AcceleratorArn:
        Ref: Accelerator
      Protocol: TCP
      ClientAffinity: NONE
      PortRanges:
        - FromPort: 443
          ToPort: 443

  EndpointGroup1:
    Type: AWS::GlobalAccelerator::EndpointGroup
    Properties:
      EndpointConfigurations:
        - EndpointId: '${self:custom.ec2.instance1.id}'
          Weight: 1
      EndpointGroupRegion: '${self:custom.ec2.instance1.region}'
      HealthCheckIntervalSeconds: 30
      HealthCheckPath: '/health'
      HealthCheckPort: 80
      HealthCheckProtocol: 'HTTP'
      ListenerArn: !Ref 'Listener'
      ThresholdCount: 3

Detailed configuration#

Stack configurations

Please configure the following values for the different stacks:

The target domain name where you want your reverse proxy to send the requests to (targetDomainName)

The email address to use for automatic certificate generation via LetsEncrypt (letsEncryptEmailAddress)

The domain name of the proxy service itself, which is then used by GlobalAccelerator (domain)

Optionally: The current IP address from which you want to access the EC2 instance(s) via SSH (sshClientIPAddress). If you want to use SSH, you'll need to uncomment the respective SecurityGroup settings

Whitelisted domain configuration

You need to make sure that not everyone can use your reverse proxy with every domain. Therefore, you need to configure the whitelist of domains that can be used by Caddy's on-demand TLS feature.

This is done with the Domain Verifier Lambda function, which is deployed at a Function URL endpoint.

The configuration can be changed here before deploying the service.
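For illustration, such a verifier can be very small. Caddy calls the configured ask endpoint with the requested hostname in a domain query parameter and only obtains a certificate if the endpoint answers with a 200 status. The following Python sketch shows that contract for a Lambda Function URL event; it is not the function from the repository, and ALLOWED_DOMAINS, is_allowed and the response bodies are assumptions made for this example.

```python
# Hypothetical static whitelist; the real service keeps its own configuration
ALLOWED_DOMAINS = {"test.myexistingdomain.com"}


def is_allowed(domain: str) -> bool:
    """Compare case-insensitively and ignore a trailing dot."""
    return domain.strip().lower().rstrip(".") in ALLOWED_DOMAINS


def handler(event, context=None):
    """Answer Caddy's ask request: 200 allows issuance, anything else denies it."""
    # Function URL events carry the query string as a dict, which may be missing
    params = event.get("queryStringParameters") or {}
    domain = params.get("domain", "")
    if domain and is_allowed(domain):
        return {"statusCode": 200, "body": "OK"}
    return {"statusCode": 403, "body": "Domain not allowed"}


# Local usage example with a hand-written event
print(handler({"queryStringParameters": {"domain": "test.myexistingdomain.com"}}))
```

Denying by default (including when the parameter is missing) matters here, because every allowed hostname can trigger a certificate request against the CA's rate limits.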

HINT: To use this dynamically, as you'd probably wish in a production setting, you could rewrite the Lambda function to read the custom domains from a DynamoDB table, and have another Lambda function run recurrently to issue DNS checks for the CNAME entries the customers would need to make (see below).

DNS / Nameserver configurations

If you use an external domain provider, such as Namecheap or GoDaddy, make sure that you point the nameserver settings in your domain's configuration to the nameservers that Amazon assigned to your HostedZone. You can look these up in the AWS Console or via the AWS CLI.

CNAME configuration for proxying

You also need to add CNAME records to the domains you want to proxy for, e.g. if your proxy service domain is external.mygreatproxyservice.com, you need to add a CNAME record to your existing domain (e.g. test.myexistingdomain.com) to redirect to the proxy service domain:

CNAME test.myexistingdomain.com external.mygreatproxyservice.com

Passing options during deployment

When running sls deploy for each stack, you can specify the following options to customize the deployments:

--stage: This will configure the so-called stage, which is part of the stack name (default: prd)

--region: This will configure the AWS region where the stack is deployed (default: us-east-1)

Deployment#

You need to follow a specific deployment order to be able to run the overall service:

Domain whitelisting service:

cd domain-service-stack && sls deploy && cd ..

Proxy server(s):

cd proxy-server-stack && sls deploy && cd ..

Global Accelerator & HostedZone / DNS:

cd accelerator-stack && sls deploy && cd ..
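The deployment order above could also be scripted. The following Python sketch is one possible way to do that, not something shipped with the repository: deploy_commands and deploy_all are hypothetical helpers, the folder names are taken from the commands above, and passing the same stage and region to every stack is a simplification (the proxy server stack may be deployed to several regions). It defaults to a dry run that only prints the commands.

```python
import subprocess

# Stack folders in the required deployment order
STACKS = ["domain-service-stack", "proxy-server-stack", "accelerator-stack"]


def deploy_commands(stage="prd", region="us-east-1"):
    """Return (working_directory, argv) pairs in the required deployment order."""
    return [
        (stack, ["sls", "deploy", "--stage", stage, "--region", region])
        for stack in STACKS
    ]


def deploy_all(stage="prd", region="us-east-1", dry_run=True):
    """Print each command and, unless dry_run is set, execute it in its folder."""
    for cwd, argv in deploy_commands(stage, region):
        print(f"[{cwd}] {' '.join(argv)}")
        if not dry_run:
            # check=True aborts the sequence as soon as one stack fails
            subprocess.run(argv, cwd=cwd, check=True)


if __name__ == "__main__":
    deploy_all(dry_run=True)
```

Stopping at the first failure is deliberate, since the later stacks depend on values produced by the earlier ones.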

Removal#

To remove the individual stacks, you can run sls remove in the individual subfolders.

Wrapping up#

We were able to build a POC for a (potentially) globally distributed reverse proxy service with on-demand TLS support. We decided against Fargate and in favor of EC2 for cost reasons, which means we prioritized cost over running as Serverless as possible. In another setting or environment, or with different experience, you might come to another conclusion, which is completely fine.

For a more production-like setup, you'd probably need to amend the Domain Verifier Lambda function, so that it dynamically looks up the custom domains that are configured e.g. by your customers via a UI, and stored in another DynamoDB table via another Lambda function. Deleting or updating those custom domains should probably be possible, too.

Furthermore, you should then write an additional Lambda function that recurrently checks, for each stored custom domain:

Whether the CNAME record points to your external.$YOUR_DOMAIN_NAME.tld, updating the status accordingly

Whether an actual redirect from the custom domain to your domain is possible, via an HTTPS check
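The CNAME part of such a check could look roughly like the following Python sketch. It is an assumption-laden illustration rather than a finished implementation: the status names are invented, and the DNS lookup itself is injected as a resolve_cname callable (which could be backed by any DNS library) so that the decision logic stays testable without network access. The HTTPS check is not covered here.

```python
from typing import Callable, Iterable


def normalize(hostname: str) -> str:
    """Lower-case a hostname and drop the trailing dot of fully qualified names."""
    return hostname.strip().lower().rstrip(".")


def cname_status(
    custom_domain: str,
    proxy_domain: str,
    resolve_cname: Callable[[str], Iterable[str]],
) -> str:
    """Return 'verified', 'misconfigured' or 'missing' (hypothetical status names)."""
    try:
        targets = [normalize(target) for target in resolve_cname(custom_domain)]
    except LookupError:
        # Assumption: the resolver raises LookupError when no record exists
        return "missing"
    if not targets:
        return "missing"
    return "verified" if normalize(proxy_domain) in targets else "misconfigured"


# Stand-in for real DNS: a dict lookup raises KeyError, a LookupError subclass
FAKE_DNS = {
    "test.myexistingdomain.com": ["external.mygreatproxyservice.com."],
    "old.myexistingdomain.com": ["somewhere-else.example.com."],
}


def fake_resolver(name: str):
    return FAKE_DNS[name]


for domain in ("test.myexistingdomain.com", "old.myexistingdomain.com", "unknown.example.com"):
    print(domain, cname_status(domain, "external.mygreatproxyservice.com", fake_resolver))
```

The resulting status could then be written back to the table of custom domains, so that the Domain Verifier only allows domains whose records are currently in place.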